Storage Review has been writing about the Mt. Diablo initiative, backed by Meta, Microsoft, and the Open Compute Project. The scheme aims to lock down interface standards at ±400VDC, a far cry from legacy 12VDC or even the more recent 48VDC setups.

Google, once the torchbearer for 48VDC, now calls this higher-voltage move “a pragmatic shift” that “frees up valuable rack space for compute resources by decoupling power delivery from IT racks via AC-to-DC sidecar units.” The company reckons the design wrings out an extra three per cent efficiency.
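To see why the voltage matters, here is a back-of-envelope sketch of the current a rack would pull at each bus voltage. The 1MW rack, the function name, and the single-load model are all assumptions for illustration, not anything from the Mt. Diablo spec.

```python
# Back-of-envelope only: treats the rack as a single DC load and ignores
# conversion losses and how the two poles of a +/-400VDC supply are wired.

def bus_current_amps(power_watts: float, bus_volts: float) -> float:
    """Current drawn from a DC bus, from I = P / V."""
    if bus_volts <= 0:
        raise ValueError("bus voltage must be positive")
    return power_watts / bus_volts


RACK_POWER_WATTS = 1_000_000  # hypothetical 1MW rack

for volts in (12, 48, 400):
    amps = bus_current_amps(RACK_POWER_WATTS, volts)
    print(f"{volts:>3} VDC: {amps:,.0f} A")
```

On those assumptions a 48VDC bus would have to carry roughly 20,800 amps, against 2,500 at 400VDC. Resistive loss in a conductor scales with the square of the current, which is why higher voltage means thinner busbars and less heat.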

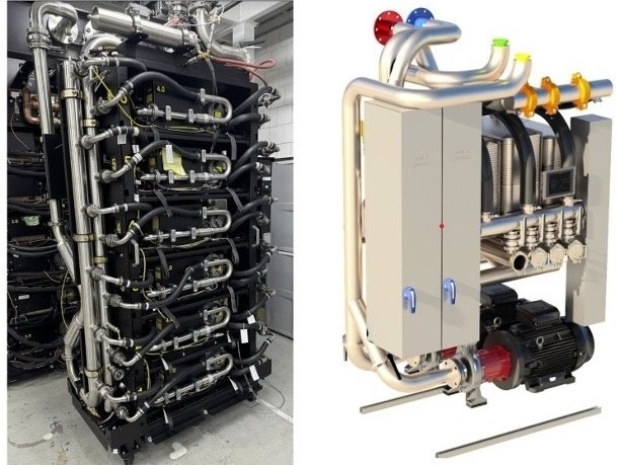

Cooling is no less critical. As chip density spikes, traditional fans are barely making a dent. Liquid cooling has become the only scalable option left, and Google has gone all in. Its TPU pods now rely entirely on liquid systems, which the company claims have delivered 99.999 per cent uptime over seven years.
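For a sense of scale, the sketch below turns that five-nines figure into minutes of permitted downtime. It assumes an average 365.25-day year and a hypothetical helper name. The article does not say how Google measures uptime, so treat the result as arithmetic rather than a statement about its fleet.

```python
# Assumption: an average year of 365.25 days, to account for leap years.
MINUTES_PER_YEAR = 365.25 * 24 * 60


def allowed_downtime_minutes(uptime_percent: float, years: float) -> float:
    """Total downtime budget implied by an uptime percentage over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (1 - uptime_percent / 100) * years * MINUTES_PER_YEAR


budget = allowed_downtime_minutes(99.999, 7)
print(f"Downtime budget over seven years: {budget:.1f} minutes")
```

That works out to a little under 37 minutes across the whole seven years, or a bit more than five minutes a year.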

These new setups swap bulky heatsinks for cold plates, halving server footprints and quadrupling compute density. But even as the tech impresses, the 1MW-per-rack dream carries risks. It’s pinned to an assumption that demand will keep surging, yet projections like Google's 500kW per rack by 2030 are far from guaranteed.

And bolting high-voltage EV tech into data centres brings its own quirks, especially around safety and repair complexity. You don’t want to be poking around 400 volts in a dark server room with a hangover.

Still, the collaboration between hyperscalers and the open hardware mob shows one thing clearly: the old playbook is toast. With AI chips now sucking more than 1,000 watts each, the days of breezy air cooling are numbered.